The official world record for the fastest single Rubik’s Cube solve is 4.69 seconds, set by Patrick Ponce earlier this month (edging out the previous record of 4.73 seconds, held by perennial champion Feliks Zemdegs). That’s brain-meltingly fast, but the robot called Sub1 Reloaded holds the robot record at 0.637 seconds.

Holy shit, right?

I’m partially impressed. The color-recognition cameras are trivial, the fast stepper motors are a’ight I guess, and our entire generation takes microelectronics for granted, so whatevs. Nah, what impresses me is the software: an algorithm that finds short solutions (21 turns in the video) and works them out in mere milliseconds, all inside the same 0.637-second solve in which the robot physically twists the cube.

Let me take a moment to teach you some cube theory. There are about 43 quintillion ways to scramble a Rubik’s Cube, yet any scrambled cube can be solved in at most 20 turns (counting a half turn as one move). That maximum is known as God’s number. We mere humans are nowhere near that efficient. A typical beginner’s solve takes about 140 turns. Speedcubers use established methods that whittle the count down to ~55 turns (though we optimize for execution time, not move count). But we’re limited by how many patterns our brains can learn to recognize, and our muscle memory can learn to execute, before we hit overload. Even Feliks, with all his ridiculous skill, probably knows 1,000 to 2,000 algorithms at most, I’d venture.
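Where does the 43 quintillion come from? Here’s a small Python sketch of the standard counting argument (the function name is my own): multiply the ways to arrange and orient the 8 corner pieces and 12 edge pieces, then account for the constraints that hold on any cube you can actually reach by turning.

```python
from math import factorial

def count_cube_states() -> int:
    """Count the reachable states of a standard 3x3x3 Rubik's Cube."""
    corner_perms = factorial(8)   # the 8 corner pieces can sit in any arrangement...
    corner_twists = 3 ** 7        # ...each twisted 3 ways, but the last twist is forced by the other 7
    edge_perms = factorial(12)    # the 12 edge pieces can sit in any arrangement...
    edge_flips = 2 ** 11          # ...each flipped 2 ways, but the last flip is forced by the other 11
    # Corner and edge permutations must share the same parity, which halves the total.
    return corner_perms * corner_twists * edge_perms * edge_flips // 2

total = count_cube_states()
print(f"{total:,}")  # 43,252,003,274,489,856,000
```

The divide-by-2 is the parity rule: no sequence of turns can swap just two pieces and leave everything else alone, which is also why a cube reassembled with one pair of edges swapped can never be solved.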

Feliks is so freakin’ fast, but I can still follow what he’s doing. Build a cross, pair up corner and edge pieces and insert them, then pull the right algorithm for whatever case shows up from a library of 300-ish. Simple.

Meanwhile, even if I slow the Sub1 Reloaded video down to frame-by-frame, I can’t follow it at all. One moment it’s scrambled and the next it’s done, with no discernible logic in between. I even know that solving robots typically use Kociemba’s two-phase algorithm: first it searches for moves that take the cube from any of the 43 quintillion possible states into a special subset of about 19.5 billion states (the ones reachable using only the top and bottom faces plus half turns of the other four), then it solves the cube within that subset. Both phases prune the tree of possible move sequences with a heuristic: a precomputed lower bound on how many turns remain, which lets the search abandon any branch that can’t finish within the current move limit. Huh?!
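Kociemba’s real solver runs its search over a full cube model with large precomputed pruning tables, once to reach the smaller subset and once to finish inside it, so I won’t pretend to reproduce it here. But the core trick, iterative-deepening A* (IDA*), fits in a short Python sketch. To keep it small I’ve swapped the cube for a toy puzzle, pancake sorting (flip the top k pancakes of a stack), and used the “gap” count as the lower bound. The puzzle, the heuristic, and all the names below are my stand-ins, not Kociemba’s. The key requirement is that the estimate never overestimates the moves left, so pruning never throws away a shortest solution.

```python
import math

State = tuple[int, ...]  # pancake sizes 1..n; index 0 is the top of the stack

def flip(state: State, k: int) -> State:
    """Slide a spatula under the top k pancakes and flip them over."""
    return state[:k][::-1] + state[k:]

def gap_heuristic(state: State) -> int:
    """Lower bound on flips remaining: count neighbouring pancakes whose sizes
    differ by more than 1, treating the plate as a pancake of size n + 1.
    A flip changes only one neighbouring pair, so this never overestimates."""
    stack = state + (len(state) + 1,)
    return sum(1 for upper, lower in zip(stack, stack[1:]) if abs(upper - lower) > 1)

def ida_star(start: State) -> list[int]:
    """Return a shortest list of flip sizes that sorts the stack, smallest on top."""
    n = len(start)
    goal = tuple(range(1, n + 1))
    if tuple(sorted(start)) != goal:
        raise ValueError("expected pancake sizes 1..n")
    path: list[int] = []
    FOUND = -1

    def search(state: State, depth: int, bound: float) -> float:
        # f = flips made so far + optimistic estimate of flips still needed
        f = depth + gap_heuristic(state)
        if f > bound:
            return f  # prune: even the optimistic estimate busts the limit
        if state == goal:
            return FOUND
        next_bound = math.inf  # smallest f that overshot the limit below here
        for k in range(2, n + 1):
            if path and path[-1] == k:
                continue  # repeating the same flip would just undo it
            path.append(k)
            result = search(flip(state, k), depth + 1, bound)
            if result == FOUND:
                return FOUND
            next_bound = min(next_bound, result)
            path.pop()
        return next_bound

    bound: float = gap_heuristic(start)
    while True:
        result = search(start, 0, bound)
        if result == FOUND:
            return path
        bound = result  # deepen to the smallest estimate that overshot

print(ida_star((3, 1, 4, 2)))  # a shortest sequence of flip sizes
```

Each pass raises the move limit only to the smallest estimate that overshot the previous one, so the first solution found is a shortest one. Kociemba’s program applies the same prune-when-the-estimate-overshoots idea, with its lookup tables playing the role of the gap count.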

This makes me a little uncomfortable, but then again it’s just one of those things computers do well, like memorizing numbers, doing long division, or playing games like chess or Go.

Radiology time!

Today I watched our chest radiologist, Dr. L, blaze through reading 70 chest x-rays (CXRs) in an hour. That is so, so, incredibly fast. During that time, he also bantered constantly with everyone else in the room, tinkered with his Sirius XM radio stations, and explicitly taught me tons about reading chest radiographs. It was nuts. I wanted to laud him as a “CXR-reading machine.”

But then I caught myself and my syntax. Yeah, Dr. L is so freakin’ fast, but there’s a key distinction between a CXR-reading wizard and a CXR-reading machine.

When I watch him work, I’m reminded of how I feel watching Feliks solve cubes: intimidated, inadequate, and hopelessly uninformed, but not totally lost. If I make Dr. L slow down to a tenth of his usual pace, I can begin to catch the tiny steps on his mental checklist. It even resembles the “search patterns” that every med student is taught, even the ones not going into radiology. Irregular peripheries -> this; unexpected contours -> that; hazy fields -> maybe pneumonia. I’m fairly confident that with a few years of training, I could become a CXR-reading wizard too. Maybe not as fast, but still.

Meanwhile, I’m trying to imagine what it might look like to watch an actual CXR-reading machine at work. That’s where radiology is headed, after all: automated reads powered by computer vision and artificial intelligence. Will I be able to understand the algorithm it uses? Or will it run its pixel matrices through neural networks that have developed algorithms with no discernible equivalent in human search patterns? Or will we replace our antiquated two-dimensional photon-sensitive plates with a whole new detector system and just let our AIs interpret x-ray vectors that our 3D-constrained mental maps can’t fathom? Or something I can’t even imagine?

And, most importantly, will we be comfortable knowing that computers reading CXRs will dictate people’s medical care? Or will our distrust of non-human entities and their dastardly “algorithms” stop that from happening?

Update 10/3: Part 2, How to Train your Rads AI.

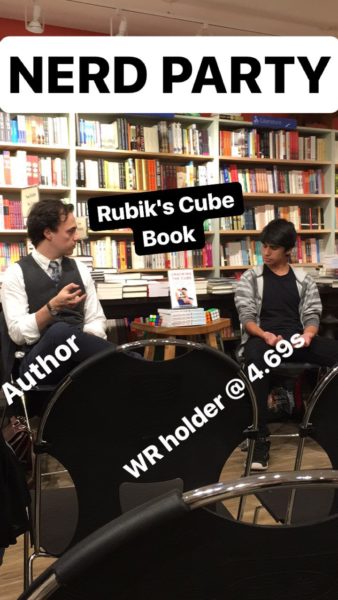

Update 9/19, the day after: look, I met Patrick Ponce! And Ian Scheffler (whom I’d met before), who’s written a book: a wonderful first-person account of the speedcubing world.